My final project for Alt-Docs proposes a web-doc about the subway as a place and space, as a character.

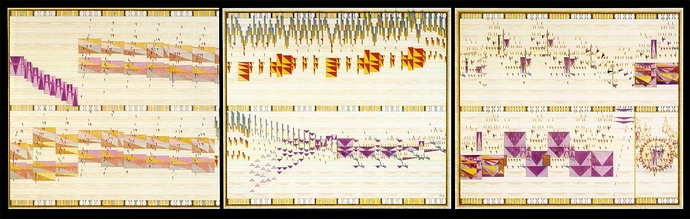

A subway train often exists between contexts (the stations) and acts as a context itself for the activity that takes place inside it. Between stations, there’s a calmness within the subway car, but once it is in a station with its doors open, the car undergoes a reshuffling.

In relation to the stations, the train connects these fixed and disparate worlds.

The movement between these spaces is largely devoid of context: the train often travels through tunnels without the lights or changing scenery that typically orient people as they travel. In this way, the train car and its people are simultaneously the context and the characters.

This external movement of the train between stations is juxtaposed with the internal stasis and consistency. The people are a step removed from movement: the train is moving but they are stationary. They’re also isolated from the external city as a context. In alternative modes of transportation such as riding the bus or walking, people physically see the change in location.

Manifestation

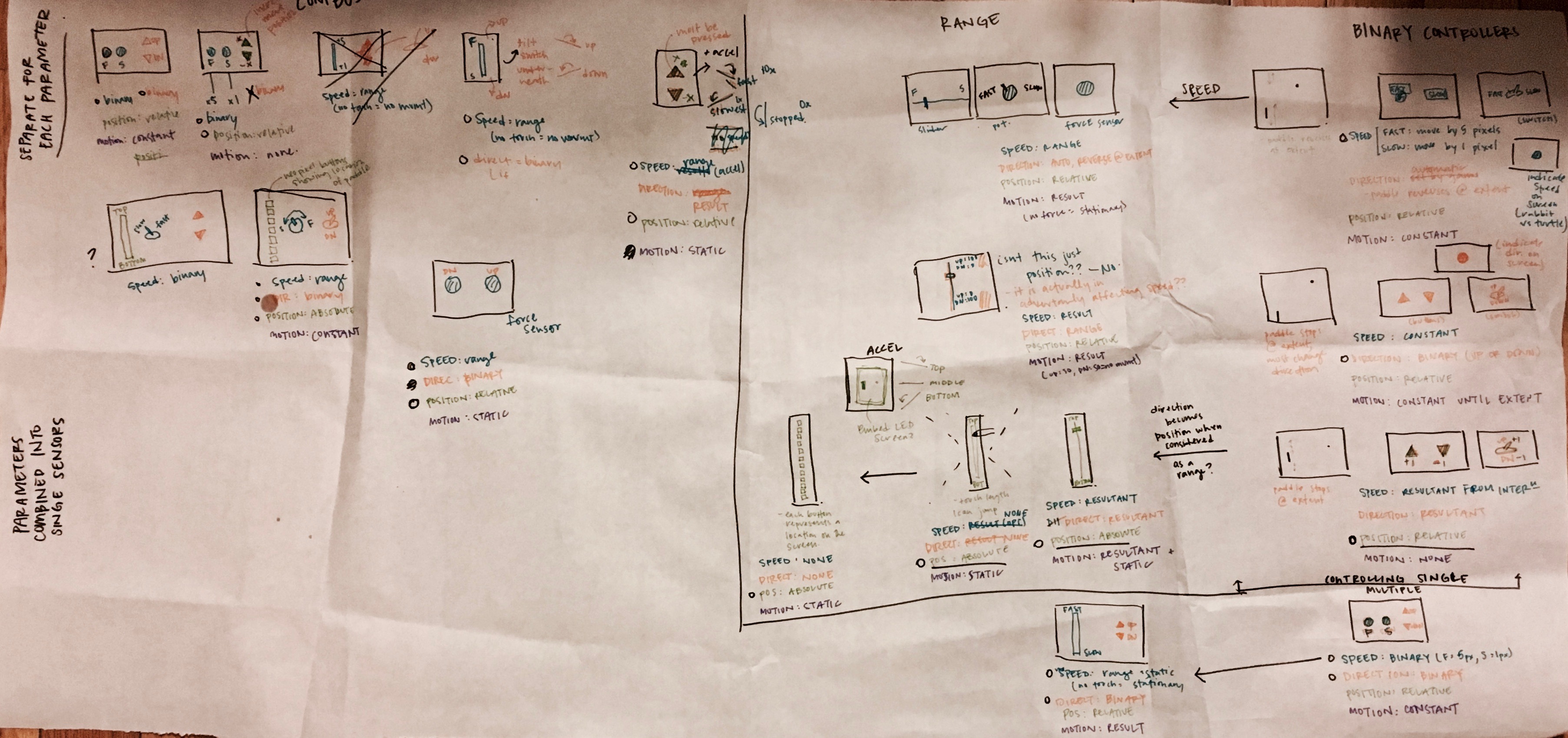

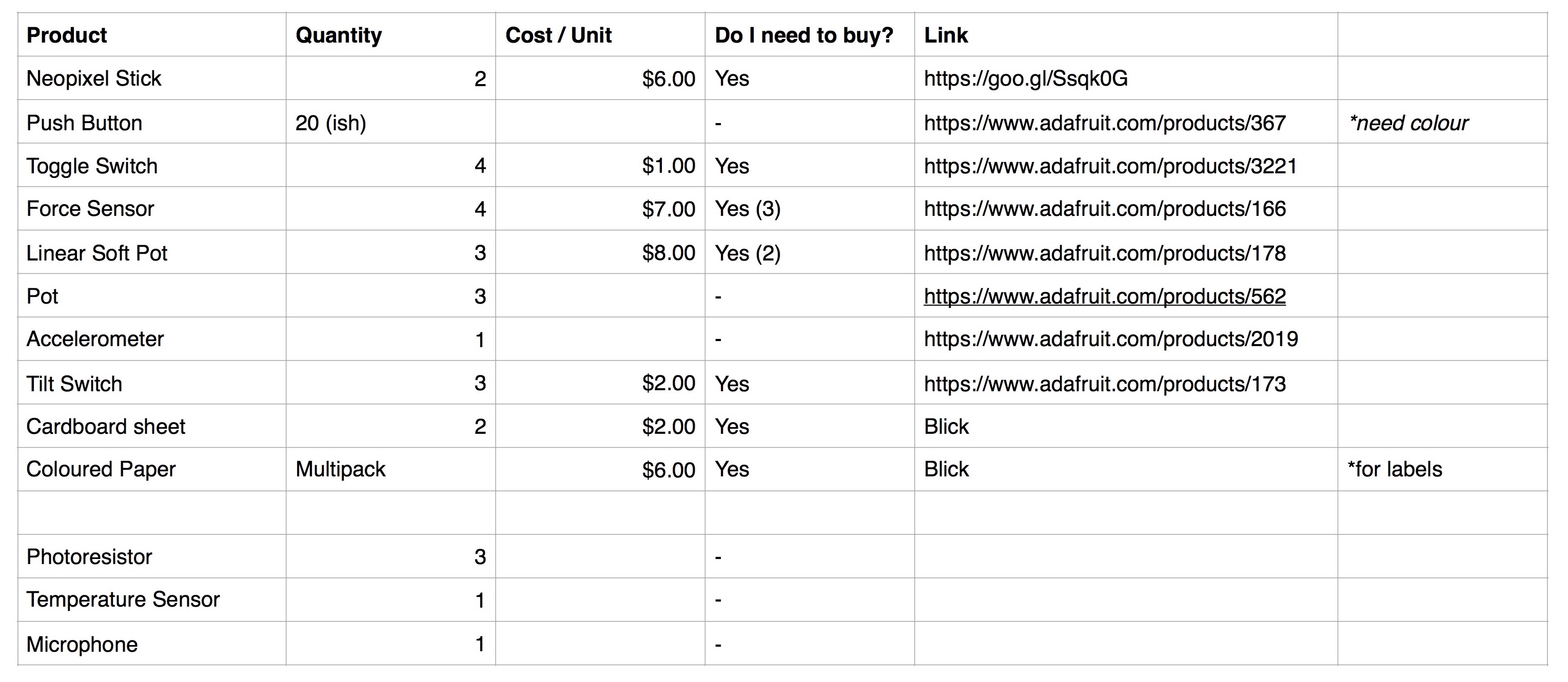

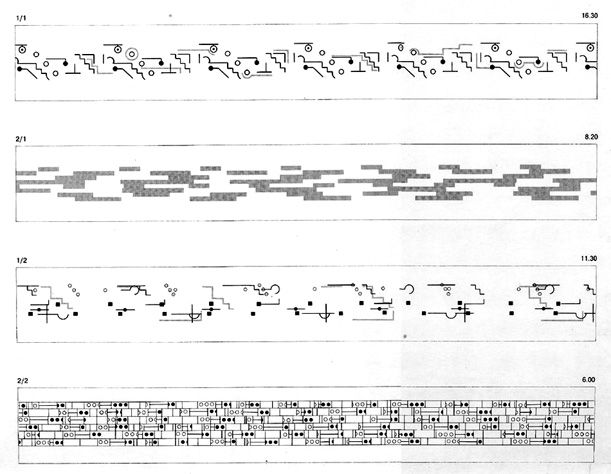

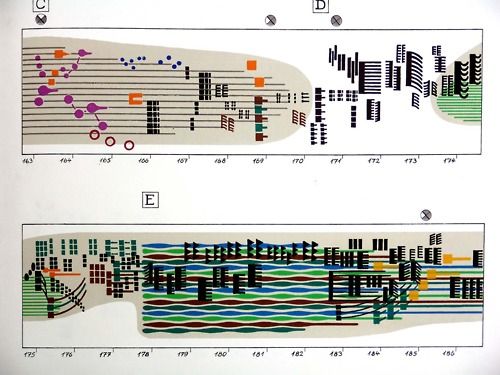

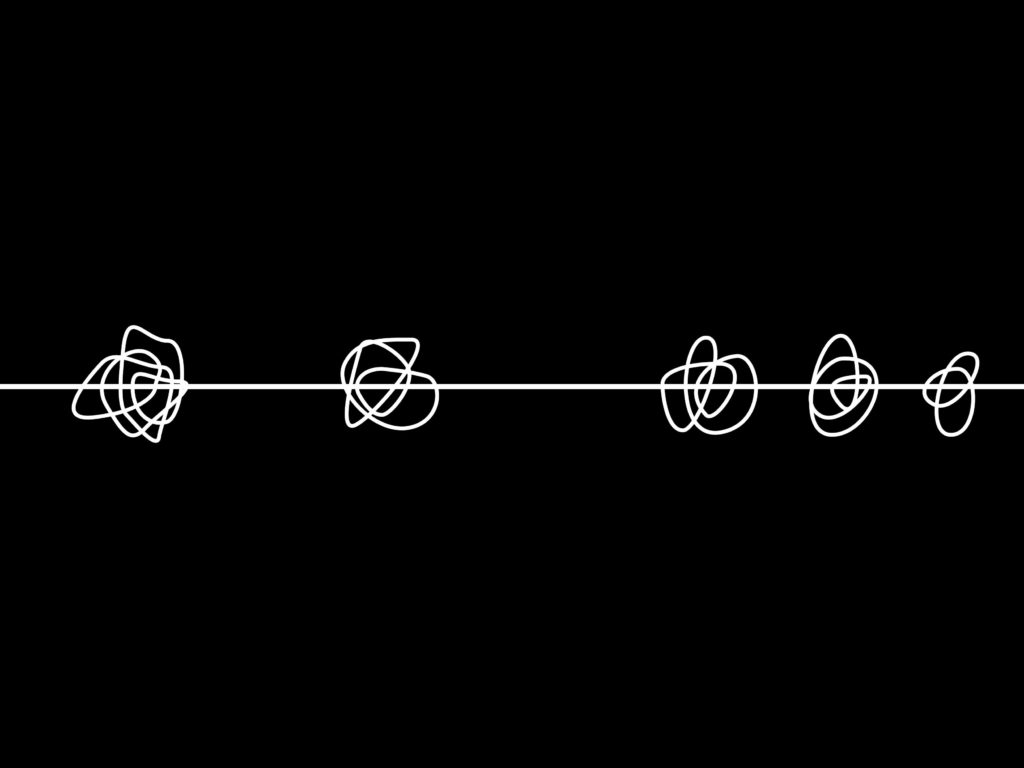

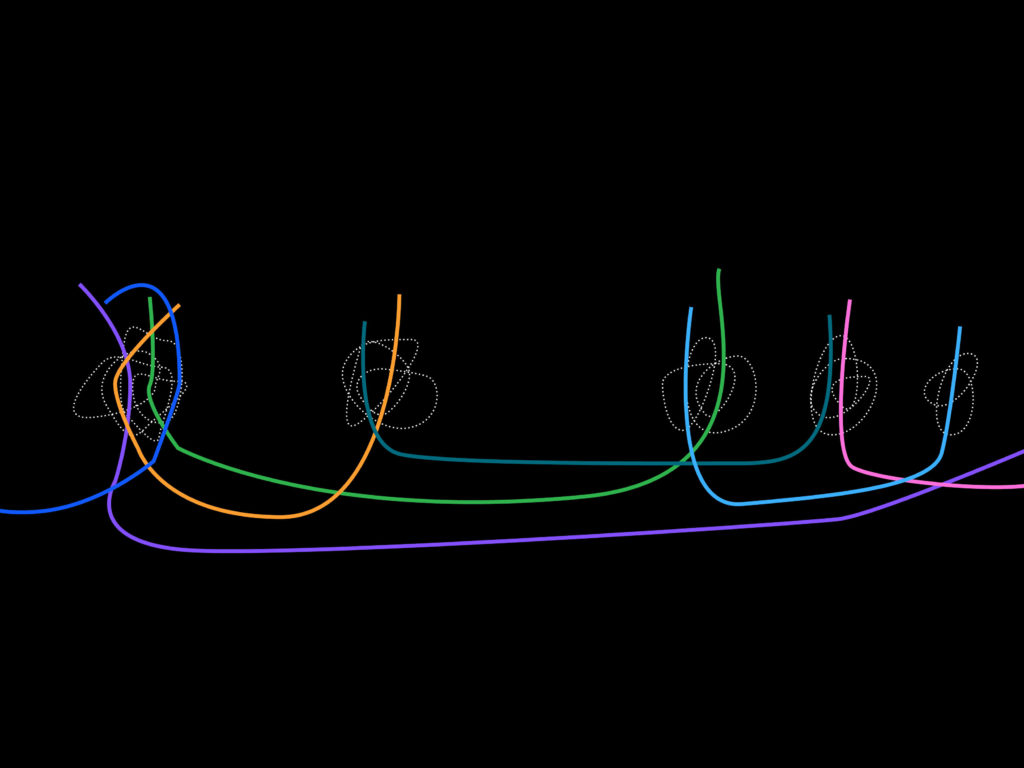

The web-doc will present a series of video pairings, juxtaposing the train and the station. The user interaction will focus on switching between or controlling the presence of the two videos.
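One way the "presence" control could work is a simple crossfade between the two videos in a pairing. The sketch below is only an illustration under assumptions: the `crossfade` function, the `PairOpacity` type, and the idea of a single slider position between 0 (train only) and 1 (station only) are hypothetical and not part of the proposal itself.

```typescript
// Hypothetical shape: how visible each video in a pairing is (0 = hidden, 1 = fully shown).
interface PairOpacity {
  train: number;
  station: number;
}

// Maps a slider position to opacities for the two videos.
// Assumption: 0 shows only the train video, 1 shows only the station video.
function crossfade(position: number): PairOpacity {
  // Treat non-finite input as 0, then clamp to the [0, 1] range.
  const safe = Number.isFinite(position) ? position : 0;
  const t = Math.min(1, Math.max(0, safe));
  return { train: 1 - t, station: t };
}
```

In a browser, the two returned values could be written to each video element's `style.opacity` whenever the user moves the control. A hard switch between videos is then just the special case where the position is always 0 or 1.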

Potential Shots

One camera is stationed in the train and rides the line from one end to the other. This would be paired with fixed shots from several stations along the same line. These shots would have fleeting moments of shared movement, but they would also highlight the disconnect and isolation within the train: the station shots pick up other trains (purple), while the “main character” train only “knows about” its own existence.

Two simultaneous videos of the train are shot from either end of the line. They overlap for a moment in the middle. But how does this relate to the station?

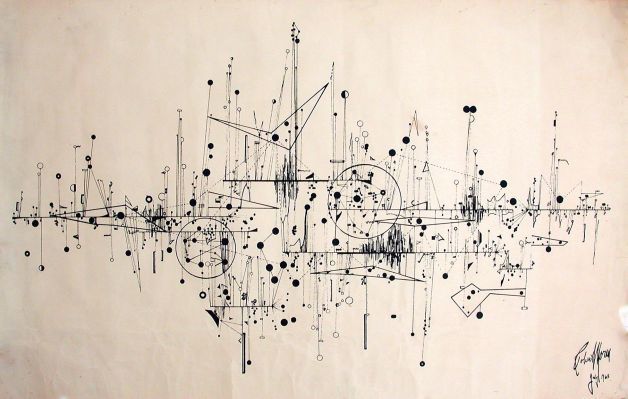

Audio

The audio track for each video pairing will provide an imagined narrative, constructed from overheard conversations recorded on the train, audiobook recordings of what people are reading, clips from songs the subway riders are listening to, etc. The composited audio is meant to bring the paired videos together into a single narrative.