Marshall McLuhan’s claim that ‘the medium is the message’, first published in 1964, is widely misunderstood as equating the message with content. This misreading is perhaps inevitable, as McLuhan’s writing is laced with personal jargon and does not always follow an easy-to-follow structure. As W. Terrence Gordon points out in the 2003 critical edition, McLuhan in fact dictated his text rather than writing it.¹

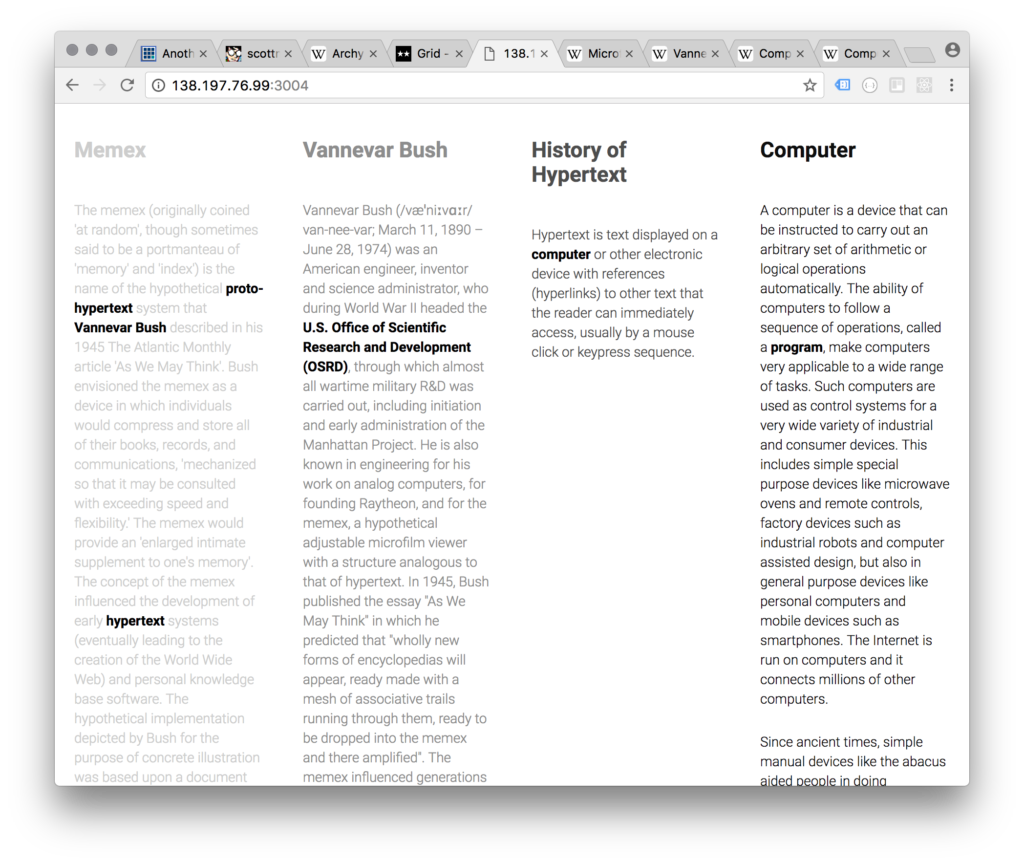

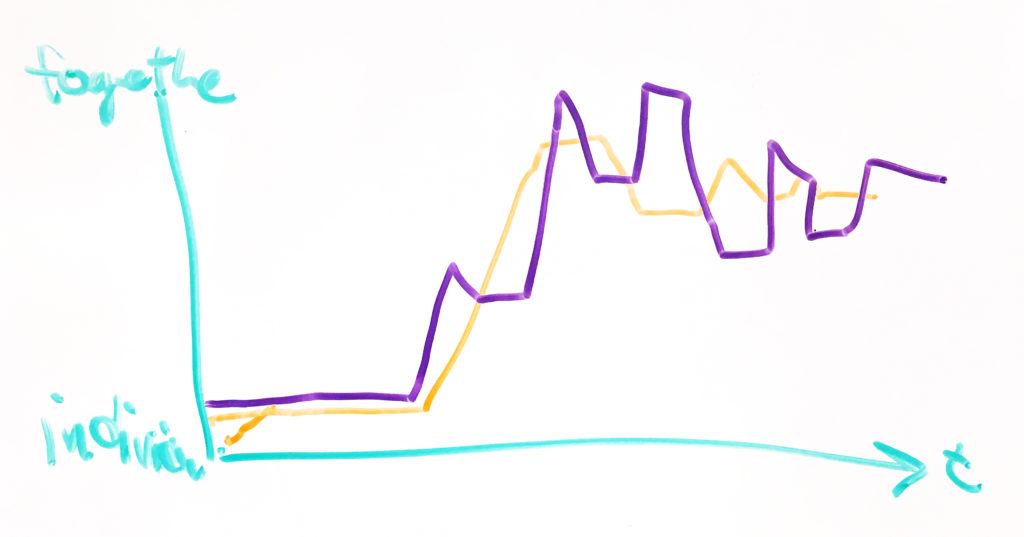

McLuhan argues that the “message” of a technological extension is actually the resulting change in human relationships, not how that technology is used. He distinguishes the “message”, or change associated with technology, from its “content”, or use, by describing the transition from the lineal connections of the mechanical age to the instant configurations of the electronic. This distinction, however, has been obscured because our literacy in one particular mechanical technology, typography, has been conflated with rationality, and thus the “message” has been conflated with “content”. Rather, McLuhan asserts, the “content” of any technology is actually another technology.

Recognizing the Message

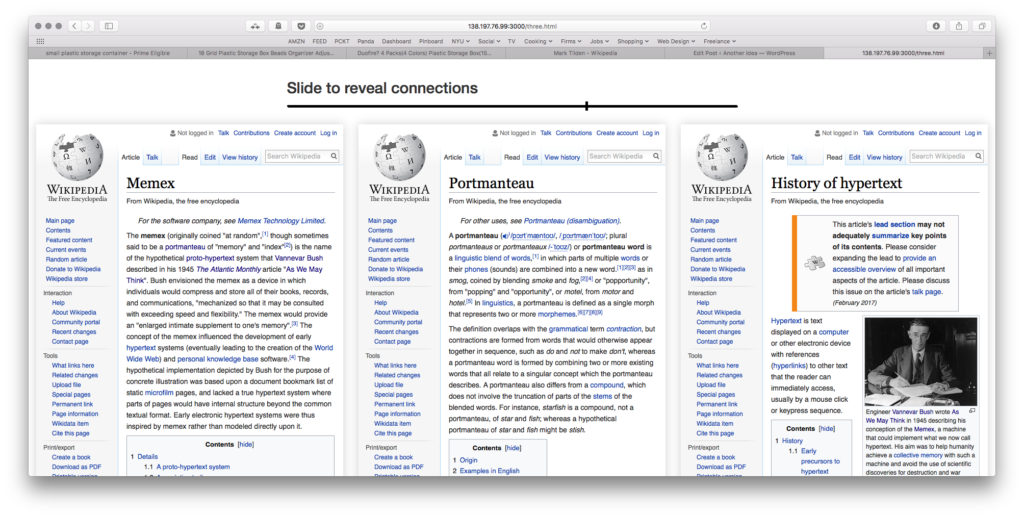

McLuhan argues that De Tocqueville was able to understand the grammar of typography (the medium and the message) because he stood outside both the structures being dismantled and the technology (print and typography) by “which their undoing occurred and could then see the ‘lines of force’ being discerned.” (McLuhan 2003:##) But, knowing that we live within the constraints and associations introduced by a previous medium, is it possible to recognize the message of new media, that is, the change a technology introduces into society? Or can it only be understood through an examination of the past?

If print and typography led us to conflate reason with the sequential and uniform, and thus inhibited our understanding of simultaneous configurations in which sequence is obscured, what is our current hindrance? Perhaps this is the challenge of discussing VR/AR/MR. Our understanding of their message (if they even have one) is frustrated by what might be called our current real-time, communication- and information-centered cultural bias. We are likely not yet far enough outside current media to understand the change of scale they introduce into our relationships, let alone decipher the message of a potential future medium.

Key Citations

“In terms of the ways in which the machine altered our relations to one another and to ourselves, it mattered not in the least whether it turned out cornflakes or Cadillacs.” (McLuhan 2003:19)

“The American stake in literacy as a technology or uniformity applied to every level of education, government, industry, and social life is totally threatened by the electric technology.” (McLuhan 2003:20)

“Cotton and oil, like radio and TV, become ‘fixed charges’ on the entire psychic life of the community. And this pervasive fact creates the unique cultural flavor of any society. It pays through the nose and all its other senses for each staple that shapes its life.” (McLuhan 2003:35)

---

McLuhan, Marshall. “The Medium Is the Message.” Understanding Media: The Extensions of Man. Ed. W. Terrence Gordon. Berkeley: Gingko, 2003. 17-35. Print.