Routine Grid (034) studies Times Square from a fixed point of view, mapping change and routine over the course of five days.

Still frames were taken from a video feed and arranged chronologically in a grid: each column represents a single day, and each row shows frames captured at the same time of day. The page scrolls automatically so users can focus on the differences and similarities within a day and between days. Does every time of day have a routine? Do the same people reappear?

People’s routines are made evident, but also routines of weather, advertisements, and furniture; of shadows, rain, and cold. The patterns repeat across days or within a day, an hour, a morning. Billboards reiterate their ads, but rarely at the same time every day. Regardless, their constant brightness makes time of day uncertain. Similarly, without the sky, weather is implied: by tone, shadow, or the wet reflection on the pavers.

Technicals

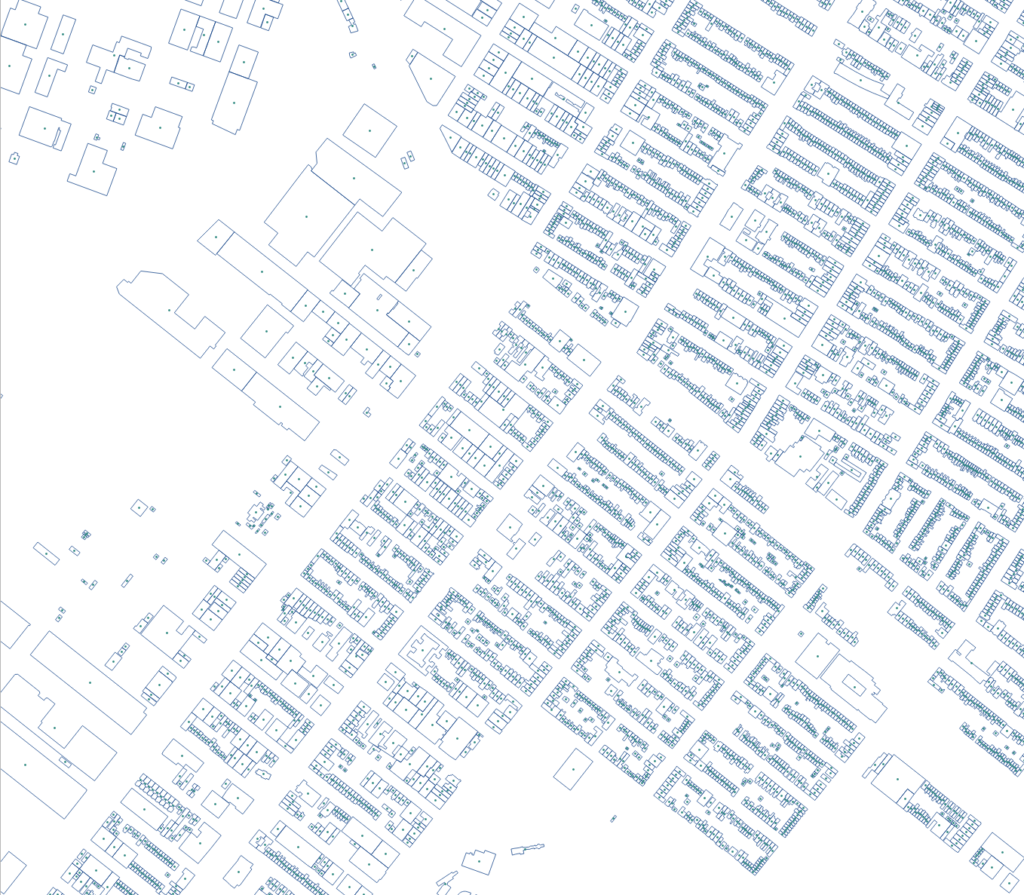

An AppleScript was set to capture a frame from a live camera stream of Times Square every 30 seconds over the course of a week. The “Lazy Load” library was used to incrementally load the images as they enter the viewport. For autoscroll, scrollTop(currentPosition + 20) is called at a set interval.

Over 2,800 frames were collected each day, so a Python script was created to automatically generate the HTML for each column. The script grabs the file names in a folder and loops through them to add the relevant HTML.
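
A minimal sketch of what such a generator might look like is below. It is not the original script: the function name `build_column`, the `column` and `lazy` class names, and the `data-src` attribute are assumptions for illustration (the attribute a lazy-loading library reads depends on the library and its configuration). It also assumes the file names sort in capture order, for example zero-padded timestamps.

```python
from html import escape
from pathlib import Path

# Image extensions to include; anything else in the folder is skipped.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def build_column(folder, day_label):
    """Return the HTML for one day's column of frames.

    Hypothetical helper: the markup and attribute names are assumptions,
    not the project's actual output.
    """
    folder = Path(folder)
    # Sorting by name keeps frames chronological, assuming the file names
    # sort by capture time.
    names = sorted(
        p.name for p in folder.iterdir() if p.suffix.lower() in IMAGE_SUFFIXES
    )
    lines = [f'<div class="column" data-day="{escape(day_label, quote=True)}">']
    for name in names:
        # The real image path goes in data-src so a lazy-loading script
        # can swap it into src when the image nears the viewport.
        src = escape(f"{folder.name}/{name}", quote=True)
        lines.append(f'  <img class="lazy" data-src="{src}" alt="">')
    lines.append("</div>")
    return "\n".join(lines)
```

Calling `build_column("day1", "Day 1")` once per day folder and joining the results would give the grid's columns; because every column is sorted the same way, the nth image in each column corresponds to the same time of day, provided no frames were dropped during capture.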

Next Steps

- Resolve loading issue: images occasionally load by column rather than by row