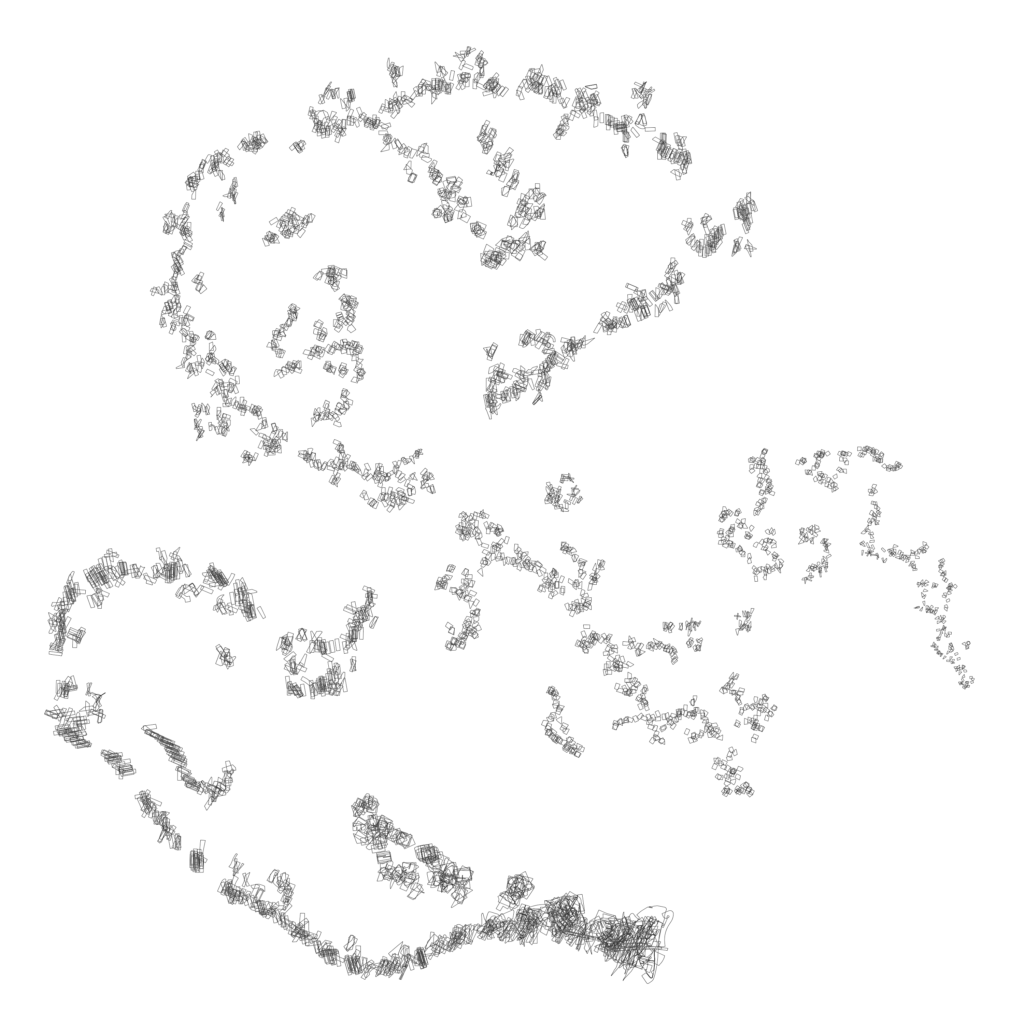

Looking for the Same is a plot of all the city blocks in the Bronx, where on hover, relative density describes the relationships of block shapes within a neighborhood.

The map uses multi-dimensional image moments to describe each block’s shape, and then t-SNE, a dimensionality reduction algorithm, to visualize these shape descriptions onto a two-dimensional plane. On hover, blocks within a neighborhood are highlighted. However, blocks are situated by similarity rather than geolocation, allowing users to compare the difference or sameness within each neighborhood. Like any visualization of an algorithmic process, it also prompts a comparison of how an algorithm finds “related” forms to our own identifications.

Technicals

To transform the block shapes into a data set, each block was saved as a black and white image from which Zernike moments were calculated. Image moments are a way of describing an image through different weightings of pixel intensities. Besides Zernike moments, Hu moments are also a popular set of invariant moments used for shape description within images. Zernike moments are particularly sensitive to the scale and translation of objects within images, but are invariant to rotation.

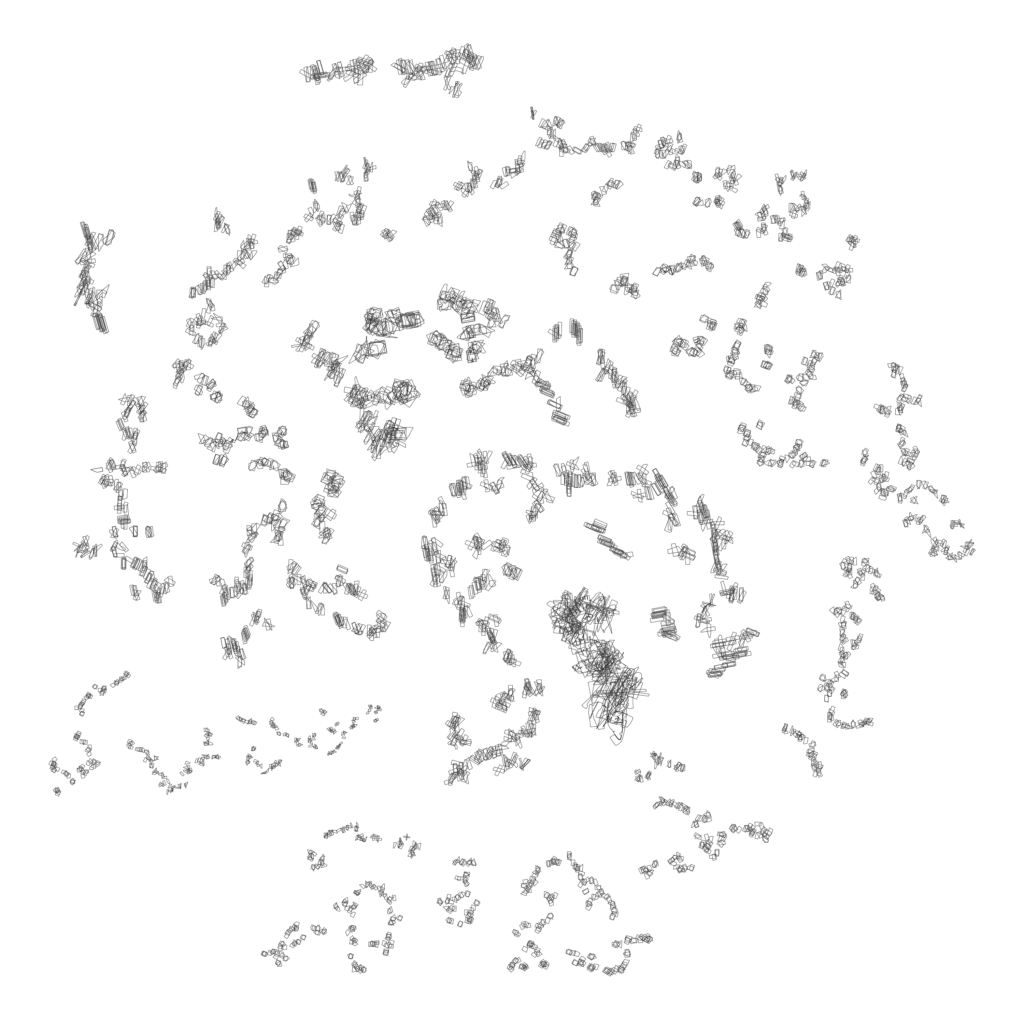

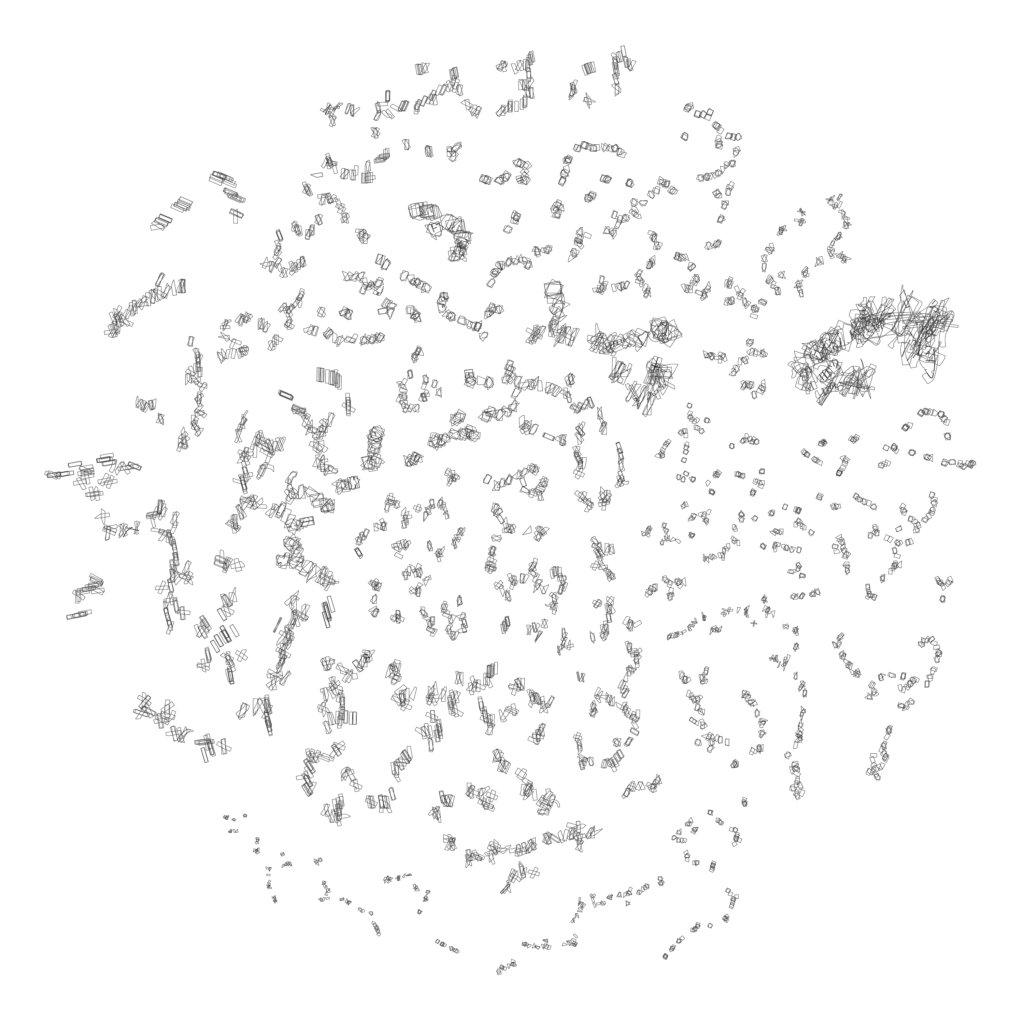

t-SNE is a dimensionality reduction algorithm that tries to find a faithful representation of high-dimensional points in a lower-dimensional space. However, as discussed by Wattenberg, Viegas and Johnson in “How To Use t-SNE Effectively“, t-SNE plots can be misleading. Even though shapes may be related, they may not appear side by side. t-SNE is highly affected by the perplexity hyperparameter, which balances between local and global aspects of the dataset. This map iteration uses a perplexity of 30. Visualizations using other perplexity values are shown below.

Next Steps

- Color-coding by similarity rather than neighborhood

- Test alternative dimensionality-reduction algorithms to compare layout

One thought on “Looking for the Same (058)”

Comments are closed.